The Silver Challenge - Lecture 4

It’s Day 4 of the Silver Challenge. Today’s post is very brief, because I just want to talk about bootstrapping. I never understood what the term meant before, but Silver explained it quite nicely. During policy evaluation, bootstrapping replaces the remainder of a trajectory with an estimate of what will happen from that point onwards [1]. This lets you run policy evaluation in real time: you do not need to watch the entire episode before evaluating the policy, because you can make an update part way through using a prediction of that trajectory’s final outcome [1].
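To make the idea concrete for myself, here is a minimal sketch of a bootstrapped update in the style of TD(0), the simplest temporal-difference method covered in the lecture. The environment is an assumption of mine for illustration: a five-state random walk that starts in the middle, moves left or right with equal probability, and pays a reward of 1 only when it exits on the right. The function names, step size and episode count are hypothetical choices, not something taken from the lecture.

```python
import random


def td0_update(value, reward, next_value, alpha=0.1, gamma=1.0):
    """Move a state's value estimate towards a bootstrapped target.

    The target uses the current estimate of the next state in place of
    the rest of the (not yet observed) return.
    """
    target = reward + gamma * next_value
    return value + alpha * (target - value)


def td0_random_walk(num_episodes=2000, alpha=0.05, gamma=1.0, seed=0):
    """Evaluate a uniform random policy on a 5-state random walk (assumed toy task)."""
    rng = random.Random(seed)
    n_states = 5
    # Indices 0 and n_states + 1 are terminal states whose value stays 0.
    values = [0.0] * (n_states + 2)
    for _ in range(num_episodes):
        state = 3  # start in the middle state
        while state not in (0, n_states + 1):
            next_state = state + rng.choice((-1, 1))
            reward = 1.0 if next_state == n_states + 1 else 0.0
            # Update immediately after one step, without waiting for the episode to end.
            values[state] = td0_update(
                values[state], reward, values[next_state], alpha, gamma
            )
            state = next_state
    return values[1:-1]  # estimates for the five non-terminal states


if __name__ == "__main__":
    print([round(v, 2) for v in td0_random_walk()])
```

For this toy task the true values are 1/6, 2/6, ..., 5/6 from left to right, so the estimates should drift towards those numbers, with some noise because the step size is constant. A Monte-Carlo version would instead have to store each episode and only update once the walk had terminated.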

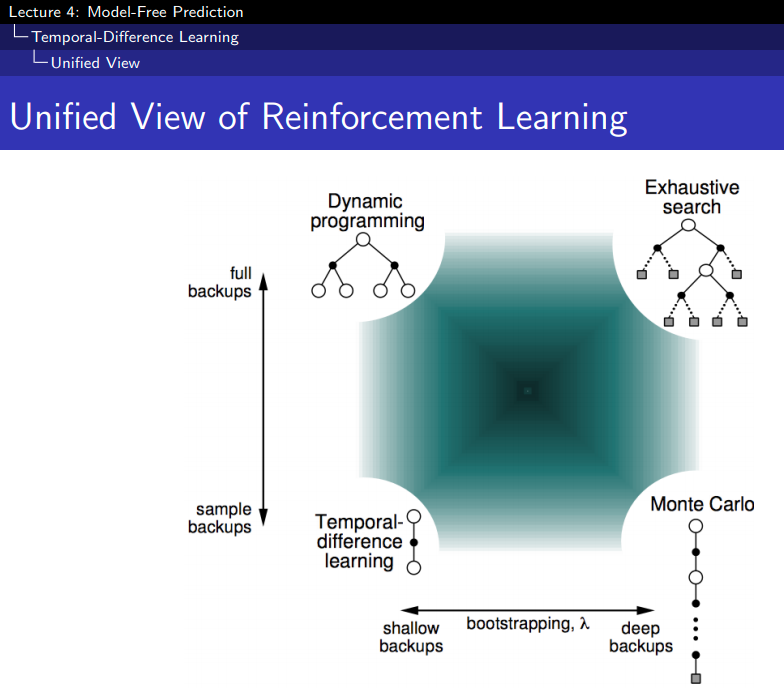

I also really liked the diagram, shown below of the distribution of approaches to model-free prediction in reinforcement learning.

Figure 1 - Source: [2]

References:

[1] Silver, D. “RL Course by David Silver - Lecture 4: Model-Free Prediction.” YouTube. 13 May 2015. https://www.youtube.com/watch?v=PnHCvfgC_ZA&list=PLqYmG7hTraZDM-OYHWgPebj2MfCFzFObQ&index=4 Visited 09 Aug 2020.

[2] Silver, D. “Lecture 4: Model-Free Prediction.” https://www.davidsilver.uk/wp-content/uploads/2020/03/MC-TD.pdf Visited 09 Aug 2020.