The Silver Challenge - Lecture 6

Today’s lecture was on value function approximation. It was good but, I have to be honest, not quite as ground-breaking as some of the other lectures we’ve seen so far. The key idea I took away is that we can treat learning an approximate value function as a supervised learning problem in which the supervisor's labels are replaced by a target from one of our policy evaluation methods (Monte Carlo, TD(0) or TD(λ)) [1].

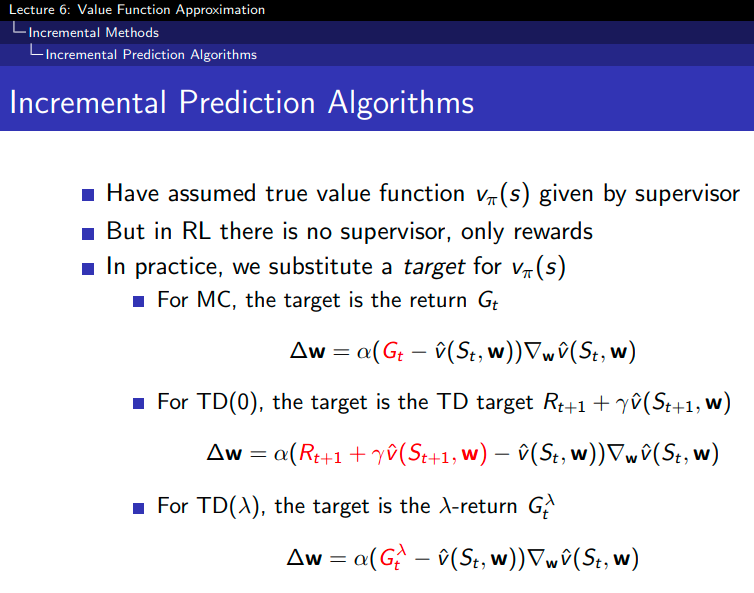

Silver summarized this idea pretty well on this slide:

Figure 1 - Source: [2]

For each prediction method, we substitute a different target (in red) into the update equation for the parameters of the function approximator [1]. Note that the slide shows the update for the general, possibly non-linear case, where the error between the target and the current estimate is multiplied by the gradient of the approximator with respect to its parameters [1]. In the linear case that gradient is just the feature vector of the state, so the expression simplifies somewhat [1].
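To make the "swap in a different target" idea concrete for myself, here is a minimal sketch in plain Python. It assumes a linear approximator (value = dot product of features and weights), so the gradient is just the feature vector. The function names (`mc_target`, `td0_target`, `sgd_update`) are my own and are not from the lecture.

```python
def dot(a, b):
    """Dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def mc_target(rewards, gamma):
    """Monte Carlo target: the discounted return G_t from the rewards
    observed after time t until the end of the episode."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td0_target(reward, next_features, w, gamma):
    """TD(0) target: one real reward plus the discounted current
    estimate of the next state's value (bootstrapping)."""
    return reward + gamma * dot(next_features, w)


def sgd_update(w, features, target, alpha):
    """One update step: w <- w + alpha * (target - v_hat) * gradient.
    Assumes a linear v_hat, so the gradient is the feature vector."""
    error = target - dot(features, w)
    return [wi + alpha * error * xi for wi, xi in zip(w, features)]


# The update rule is the same; only the target changes.
w = [0.0, 0.0]
x_s, x_next = [1.0, 0.0], [0.0, 1.0]
w_mc = sgd_update(w, x_s, mc_target([1.0, 1.0], gamma=0.5), alpha=0.1)
w_td = sgd_update(w, x_s, td0_target(1.0, x_next, w, gamma=0.5), alpha=0.1)
```

A TD(λ) version would plug the λ-return into the same `sgd_update` call. I left it out to keep the sketch short.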

In this lecture, Silver also presented the results from the DQN paper (hilariously, it had come out in Nature just the week before this lecture - time flies!) [1]. He explained the idea of a target Q-network in a way that I liked, saying that the DQN architecture has an active Q-network that bootstraps towards the “frozen” target Q-network [1]. This helps stabilize learning, which, as Silver took care to explain, is not guaranteed to converge when TD learning methods are combined with non-linear function approximators [1].
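Here is a rough sketch of how I picture the active/frozen split. It is not the DQN implementation from the paper: I am assuming a linear Q-function per action instead of a deep network, and I am leaving out experience replay entirely. The class name `TargetNetworkSketch` and the `sync_every` parameter are my own inventions for illustration.

```python
import copy


class TargetNetworkSketch:
    """Q-learning with a frozen copy of the weights used for targets.
    Assumption: linear Q per action, no replay buffer, no neural net."""

    def __init__(self, n_features, n_actions, alpha=0.1, gamma=0.9,
                 sync_every=100):
        # Active weights: updated on every step.
        self.w = [[0.0] * n_features for _ in range(n_actions)]
        # Frozen weights: only used to compute the bootstrap target.
        self.w_target = copy.deepcopy(self.w)
        self.alpha, self.gamma = alpha, gamma
        self.sync_every = sync_every
        self.steps = 0

    @staticmethod
    def q_values(weights, x):
        """Q(s, a) for every action under the given weights."""
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in weights]

    def update(self, x, action, reward, x_next, done):
        # The target bootstraps from the FROZEN weights, not the active ones.
        target = reward
        if not done:
            target += self.gamma * max(self.q_values(self.w_target, x_next))
        td_error = target - self.q_values(self.w, x)[action]
        # Linear case: the gradient w.r.t. w[action] is the feature vector.
        self.w[action] = [wi + self.alpha * td_error * xi
                          for wi, xi in zip(self.w[action], x)]
        self.steps += 1
        # Periodically copy the active weights into the frozen copy.
        if self.steps % self.sync_every == 0:
            self.w_target = copy.deepcopy(self.w)
        return td_error
```

Between syncs the target stays fixed while the active weights move, so the network is not chasing a target that shifts on every single update.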

References:

[1] Silver, D. “RL Course by David Silver - Lecture 6: Value Function Approximation.” YouTube. 13 May 2015. https://www.youtube.com/watch?v=UoPei5o4fps&list=PLqYmG7hTraZDM-OYHWgPebj2MfCFzFObQ&index=6 Visited 11 Aug 2020.

[2] Silver, D. “Lecture 6: Value Function Approximation.” https://www.davidsilver.uk/wp-content/uploads/2020/03/FA.pdf Visited 11 Aug 2020.