The Silver Challenge - Lecture 7

Today's lecture felt a bit odd because we did not get to see Silver move around and point at things on the slides like we usually do, which added a little disconnect. Nevertheless, it was very interesting because it covered policy gradient approaches to learning the optimal policy. This topic has been my focus recently, as I have been learning about DDPG and other policy gradient methods for MolGAN. So although I have previously written about many of the topics he covered today, I wanted to share one “aha!” moment that I had, along with a nice summary slide that Silver provided.

My “aha!” moment was in response to Silver’s discussion of the advantages of policy gradient-based reinforcement learning. He explained that policy gradient-based methods work well in continuous, high-dimensional action spaces [1]. I have heard this before: the reason is that you do not need to find the value-maximizing action in order to improve the policy [1]. In value-based methods, finding the maximum over all possible actions for a given state can become computationally intractable, especially as the action space grows larger and we move towards a continuous action space [1]. What I finally understood is that policy gradient-based methods are better here precisely because they never try to find this maximizing action at all [1]. Instead, they only compute the gradient of the objective with respect to the policy parameters and move the parameters up that gradient towards the optimal policy [1]. Computing the gradient, it turns out, is much more tractable, and it works especially well in continuous environments [1].
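
To make the point concrete, here is a minimal sketch of a policy gradient update on a toy problem with a continuous action. Everything in it is an assumption for illustration and not something taken from the lecture: the one-step reward `-(a - target)**2`, the Gaussian policy with a fixed standard deviation `sigma`, the batch-mean baseline, and the function name `train_gaussian_policy`. Note that the loop only samples actions and follows the gradient estimate; it never searches over actions for a maximum.

```python
import numpy as np


def train_gaussian_policy(target=3.0, sigma=0.5, lr=0.05, steps=500,
                          batch=256, seed=0):
    """REINFORCE-style updates on a one-step task with a continuous action.

    Hypothetical setup: reward(a) = -(a - target)**2 and a ~ N(mu, sigma^2).
    """
    rng = np.random.default_rng(seed)
    mu = 0.0  # the only policy parameter; sigma stays fixed
    for _ in range(steps):
        actions = rng.normal(mu, sigma, size=batch)   # sample actions, no argmax
        rewards = -(actions - target) ** 2
        baseline = rewards.mean()                     # baseline to reduce variance
        score = (actions - mu) / sigma ** 2           # d/dmu of log pi(a)
        grad = np.mean(score * (rewards - baseline))  # policy gradient estimate
        mu += lr * grad                               # move up the gradient
    return mu


if __name__ == "__main__":
    print(train_gaussian_policy())
```

Under these assumptions, the learned mean `mu` should end up close to `target`, even though no step of the loop ever looks for the best action directly.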

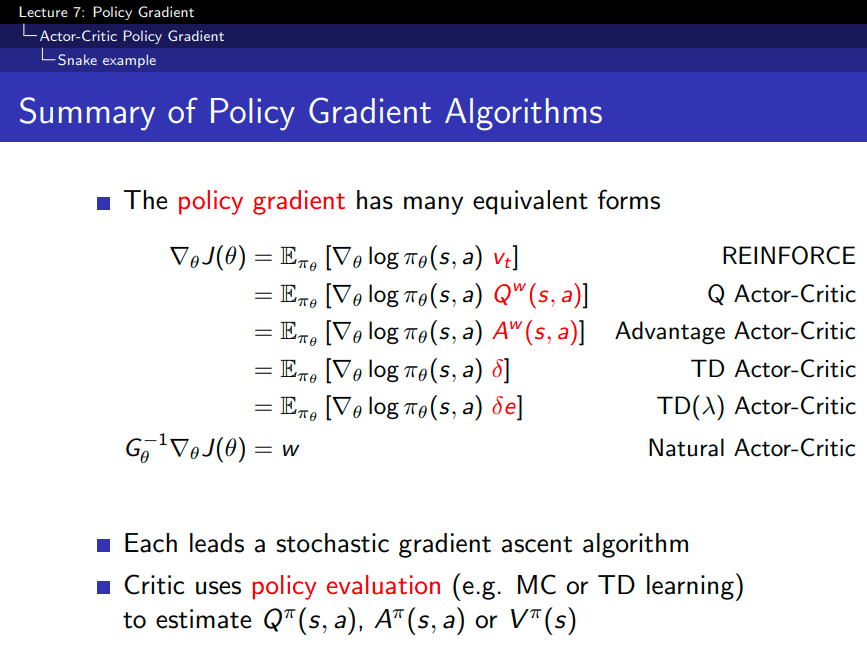

Secondly, Silver posted this slide summarizing many of the policy gradient algorithms that we went over in lecture, which I thought was quite helpful.

Figure 1 - Source: [2]

References:

[1] Silver, D. “RL Course by David Silver - Lecture 7: Policy Gradient Methods.” YouTube. 21 Dec 2015. https://www.youtube.com/watch?v=KHZVXao4qXs&list=PLqYmG7hTraZDM-OYHWgPebj2MfCFzFObQ&index=7 Visited 12 Aug 2020.

[2] Silver, D. “Lecture 7: Policy Gradient.” https://www.davidsilver.uk/wp-content/uploads/2020/03/pg.pdf Visited 12 Aug 2020.